In digital marketing, trends and consumer behavior change constantly, so the ability to adapt and optimize your strategies is essential. A/B testing, often called split testing, is a practical tool for marketers who want to fine-tune campaigns and improve ROI: it lets you systematically compare two or more variations of a marketing element to determine which one performs best with your audience. This methodical approach can improve conversion rates, click-throughs, and engagement. Join us as we explore what A/B testing is, how to set up and analyze a test, the best practices to follow, and how it can strengthen your digital marketing.

1. What is A/B testing?

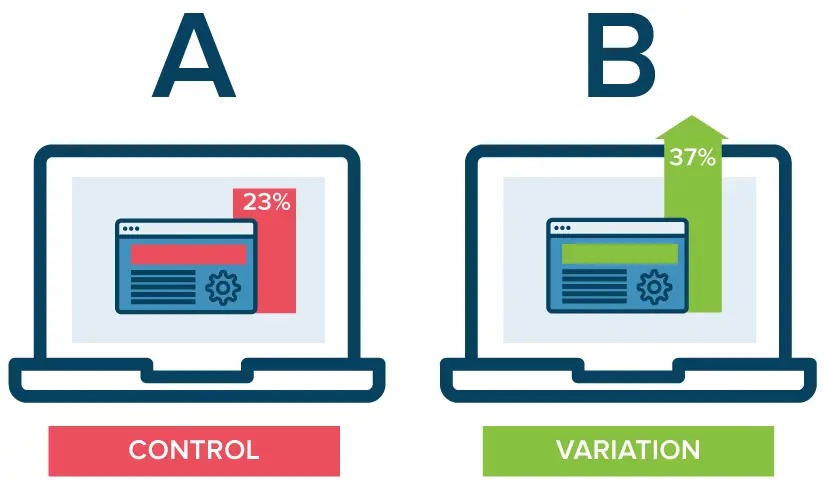

A/B testing, also known as split testing, is a systematic and data-driven method used to compare two versions of a web page, email, or any digital asset to determine which one performs better. It involves creating two versions, Version A (the control) and Version B (the variant), with a single differing element; this could be a headline, call-to-action button, image, or any other component. The aim is to identify which version leads to better user engagement, higher conversion rates, or other predefined goals.

2. The Science Behind A/B Testing

Behavioral psychology and decision-making

To truly understand A/B testing, it helps to look at behavioral psychology. Human decision-making is a complex process influenced by many factors, including emotions, cognitive biases, and environmental stimuli. A/B testing does not measure these factors directly; it measures how they show up in behavior. Each user sees only one version of a webpage or advertisement, and subtle differences between the versions can shift the choices users make, often without their being aware of it. Comparing the aggregate behavior of the two groups shows which version appeals more to users, which in turn points the way to higher engagement and conversions.

Statistical Significance

One critical aspect of A/B testing is statistical significance. To ensure that the results are reliable and not due to chance, a sufficient number of users must be exposed to each version. Tools like Google Optimize, Optimizely, or VWO (Visual Website Optimizer) help determine whether the results of your A/B tests are statistically significant.
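How many users count as "sufficient" can be estimated before the test starts. The sketch below uses the standard sample-size approximation for comparing two proportions. The function name, the 5% significance level, and the 80% power are illustrative assumptions, not settings taken from any of the tools above, and those tools may use different methods internally.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_lift,
                            alpha=0.05, power=0.8):
    """Approximate users needed in EACH variant (two-sided test).

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest relative improvement worth detecting,
        e.g. 0.2 for a 20% relative lift (5% -> 6%).
    alpha and power are assumed defaults; adjust to your own standards.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be in (0, 1) and must differ")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Example: 5% baseline conversion rate, aiming to detect a 20% relative lift.
print(sample_size_per_variant(0.05, 0.2))
```

The smaller the improvement you want to detect, the more users each version needs, which is why low-traffic pages often require longer tests.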

3. Setting up an A/B test

Creating a successful A/B test involves several key steps:

Identify your objective

Before setting up the test, define your objectives clearly. Are you trying to increase click-through rates, reduce bounce rates, or boost product sales? Knowing your goals will guide the entire testing process.

Choose the variable to test

Select the specific element you want to test. Common options include headlines, images, content layout, or call-to-action buttons.

Create Variations

Develop the control version (A) and the variant (B) with the differing elements. Ensure that only one element is changed to isolate the impact.

Randomly assign visitors

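Use A/B testing software to randomly assign each visitor to your website, or each recipient of your email, to either Version A or Version B. Random assignment keeps the two groups comparable, so differences in results can be attributed to the change being tested.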
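A/B testing software handles assignment for you, but the idea is easy to illustrate. One common approach, sketched here, hashes a user identifier together with an experiment name so that the split is effectively random across users while each user always sees the same version. The function name, the experiment name, and the ID format are hypothetical; this is not how any particular tool is documented to work.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to one variant of an experiment.

    Hashing (experiment, user_id) spreads users roughly evenly across
    variants, and the same user always lands in the same variant.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer bucket number.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Example with hypothetical user IDs and experiment name.
for uid in ("user-1001", "user-1002", "user-1003"):
    print(uid, assign_variant(uid, "homepage-headline"))
```

Including the experiment name in the hash means a user who lands in Version A of one test is not automatically placed in Version A of every other test.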

Collect Data

Monitor the user interactions and gather data over a predefined period. This data will be used for analysis.

4. Measuring and Analyzing the Results

Once your A/B test has run its course, it’s time to evaluate the results.

Conversion Rate

The primary metric you’ll analyze is the conversion rate, or the percentage of visitors who completed the desired action. This could be making a purchase, signing up for a newsletter, or any other relevant action.
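As a small worked example, the conversion rate and the relative lift of the variant over the control can be computed as follows. The function names and the visitor and conversion counts are made up for illustration.

```python
def conversion_rate(conversions, visitors):
    """Fraction of visitors who completed the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_control, rate_variant):
    """Relative change of the variant's rate compared with the control's."""
    if rate_control <= 0:
        raise ValueError("control rate must be positive")
    return (rate_variant - rate_control) / rate_control


# Illustrative numbers only: 1,000 visitors per version.
rate_a = conversion_rate(50, 1000)  # Version A (control)
rate_b = conversion_rate(60, 1000)  # Version B (variant)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  lift: {relative_lift(rate_a, rate_b):.1%}")
```

A lift on its own does not say whether the difference is reliable; that depends on how many visitors each version received.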

Statistical Analysis

To determine whether the differences observed are statistically significant, use A/B testing tools to analyze the data. This helps ensure that the results are not due to random chance.
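To make "statistically significant" concrete, here is a minimal sketch of one standard method, the two-proportion z-test. It is an illustration under the assumption of large, independent samples, not necessarily the calculation any specific A/B testing tool performs; the function name and the example counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). A small p-value (commonly below 0.05) suggests
    the observed difference is unlikely to be due to chance alone.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Same 5% vs 6% conversion rates at two different sample sizes.
for n in (1000, 10000):
    z, p = two_proportion_z_test(n * 5 // 100, n, n * 6 // 100, n)
    print(f"n={n}: z={z:.2f}, p={p:.4f}")
```

The example runs the same pair of conversion rates at two sample sizes to show that an identical lift can be inconclusive with little traffic and significant with more.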

User Feedback

Consider gathering qualitative feedback through surveys or user testing to understand the “why” behind the quantitative results.

5. A/B Testing Best Practices

For a successful A/B testing campaign, consider these best practices:

Test one variable at a time

Change only one variable per test so that any difference in user behavior can be attributed to that component. If several elements change at once, you cannot tell which one caused the result.

Give it time

A/B testing requires a sufficient sample size and duration to yield reliable results. Be patient, and avoid drawing conclusions too quickly.
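A rough way to plan the duration is to divide the total number of users the test needs by your daily traffic, then round up to whole weeks so that every day of the week is represented equally. The sketch below assumes traffic is split evenly between versions; the function name, the seven-day minimum, and the example figures are illustrative assumptions.

```python
import math


def estimated_test_days(sample_per_variant, daily_visitors,
                        num_variants=2, min_days=7):
    """Estimate how many days a test should run, in whole weeks.

    sample_per_variant: users required in each version (from your own
        sample-size estimate).
    daily_visitors: average daily visitors entering the test.
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    days_needed = math.ceil(sample_per_variant * num_variants / daily_visitors)
    days = max(days_needed, min_days)
    # Round up to full weeks to cover weekday and weekend behavior evenly.
    return math.ceil(days / 7) * 7


# Illustrative: 8,000 users per version, 2,000 visitors per day.
print(estimated_test_days(8000, 2000))  # → 14
```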

Analyze both quantitative and qualitative data

Combine quantitative data, like conversion rates, with qualitative data, such as user feedback, to gain a holistic view of the user experience.

Continuously Optimize

A/B testing is not a one-time effort. Continuously optimize your digital assets based on the insights gained from testing.

6. The Impact of A/B Testing on SEO

While A/B testing is primarily associated with improving conversion rates and user experience, the improvements it leads to can also benefit SEO.

Page Speed

Google uses page speed as a ranking factor. A/B testing can help you identify and eliminate elements that slow down your website, leading to better search engine rankings.

User Experience

Google values the user experience highly. A/B testing can help you make user-centric improvements, which in turn can positively affect your SEO.

Content Relevance

A/B testing can reveal which content resonates best with your audience. By tailoring your content based on A/B test results, you can improve the relevance of your website, which can in turn help your SEO.

Conclusion

In the world of digital marketing, A/B testing is an invaluable tool that allows businesses to refine their online strategies for maximum effectiveness. By leveraging the principles of behavioral psychology, statistical analysis, and user feedback, A/B testing empowers marketers to make data-driven decisions that lead to higher conversion rates, an improved user experience, and enhanced SEO.

For more details, see https://emeritus.org/

FAQs

1. What are some common elements to A/B test in email marketing?

Common elements to test in email marketing include subject lines, email copy, images, call-to-action buttons, and send times.

2. How long should an A/B test typically run?

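The duration of an A/B test depends on factors like your website traffic and the size of the effect you want to detect. It’s commonly recommended to run tests for at least one to two weeks, so that the test covers full weekly cycles and collects enough data to reach statistical significance.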

3. Can A/B testing be used for offline marketing campaigns?

While A/B testing is most commonly associated with digital marketing, it can also be applied to offline campaigns. For example, testing different versions of a print ad or direct mail piece can provide valuable insights into customer preferences.
